I signed off my Clojure 2011 Year in Review with the words “You ain’t seen nothing yet.” Coming back for 2012, all I can think of is “Wow, what a year!” I’m happy to say that Clojure in 2012 exceeded even my wildest dreams.

2012 was the year when Clojure grew up. It lost the squeaky voice of adolescence and gained the confident baritone of a professional language. The industry as a whole took notice, and people started making serious commitments to Clojure in both time and money.

There was so much Clojure news in 2012 that I can’t even begin to cover it all. I’m sure I’ve missed scores of important and exciting projects. But here are the ones that came to mind:

Growth & Industry Mindshare

- The Clojure mailing list has over seven thousand members.

- Chas Emerick’s State of Clojure Survey got twice as many responses as in 2011.

- Two new mailing lists spun up, clojure-tools for “Clojure toolsmiths” and clojure-sec for “security issues affecting those building applications with Clojure.”

- Clojure moved into the “Adopt” section of ThoughtWorks’ Technology Radar in October.

- Conferences! I hereby dub 2012 the “year of the Clojure conference.” The first ever Clojure/West took place in San Jose in March, and Clojure/conj returned to Raleigh in November. London got into the Clojure conference action with EuroClojure in May and Clojure eXchange in December.

- O’Reilly got into the Clojure book game with two releases: the massive Clojure Programming by Chas Emerick, Christophe Grand, and Brian Carper; and the pocket-sized ClojureScript: Up and Running by me and Luke VanderHart (see link for a discount code valid until Feb. 1, 2013).

The Language

- Clojure contributors closed 113 JIRA tickets on the core language (not counting duplicates).

- Clojure 1.4 introduced tagged literals, and the Extensible Data Notation (edn) began an independent existence, including implementations in Ruby and JavaScript.

- Clojure 1.5 entered “release candidate” status, bringing the new reducers framework and new threading macros.

Software & Tools

- The big news, of course, was the release of Datomic, a radical new database from Rich Hickey and Relevance, in March. Codeq, a new way to look at source code repositories, followed in October.

- Light Table, a new IDE oriented towards Clojure, rocketed to over $300,000 in pledges on Kickstarter and entered the Summer 2012 cohort of YCombinator.

- Speaking of tooling, what a bounty! Leiningen got a major new version, as did nREPL and tools.namespace. Emacs users finally escaped the Common Lisp SLIME with nrepl.el.

- Red Hat’s Immutant became the first comprehensive application server for Clojure.

- ClojureScript One demonstrated techniques for building applications in ClojureScript.

Blogs and ‘Casts

- Planet Clojure now aggregates over 400 blogs.

- I laid out some history and motivation for the Clojure contribution process in Clojure/huh? – Clojure’s Governance and How It Got That Way.

- Chas Emerick’s Mostly Lazy podcast featured Phil Hagelberg, Anthony Grimes, and Chris Houser.

- Michael Fogus interviewed prominent Clojure community members in his “take 5” series: Anthony Grimes, Jim Crossley, Colin Jones, Daniel Spiewak, Baishampayan Ghose, Kevin Lynagh, William Byrd, Arnoldo Jose Muller-Molina, and Sam Aaron.

- Craig Andera launched the Relevance podcast, where he interviewed many Clojurists such as Timothy Baldridge, Jason Rudolph, Rich Hickey (twice), me!, Clojure conference organizers, Michael Fogus (twice), Stuart Halloway, Alan Dipert, Aaron Bedra, Brenton Ashworth, and David Liebke.

I have no idea what 2013 is going to bring. But if I were to venture a guess, I’d say it’s going to be a fantastic time to be working in Clojure.

When (Not) to Write a Macro

The Solution in Search of a Problem

A few months ago I wrote an article called Syntactic Pipelines, about a style of programming (in Clojure) in which each function takes and returns a map with similar structure:

    (defn subprocess-one [data]
      (let [{:keys [alpha beta]} data]
        (-> data
            (assoc :epsilon (compute-epsilon alpha))
            (update-in [:gamma] merge (compute-gamma beta)))))

    ;; ...

    (defn large-process [input]
      (-> input
          subprocess-one
          subprocess-two
          subprocess-three))

In that article, I defined a pair of macros that allow the preceding example to be written like this:

    (defpipe subprocess-one [alpha beta]
      (return (:set :epsilon (compute-epsilon alpha))
              (:update :gamma merge (compute-gamma beta))))

    (defpipeline large-process
      subprocess-one
      subprocess-two
      subprocess-three)

I wanted to demonstrate the possibilities of using macros to build abstractions out of common syntactic patterns. My example, however, was poorly chosen.

The Problem with the Solution

Every choice we make while programming has an associated cost. In the case of macros, that cost is usually borne by the person reading or maintaining the code.

In the case of defpipe, the poor sap stuck maintaining my code (maybe my future self!) has to know that it defines a function that takes a single map argument, despite the fact that it looks like a function that takes multiple arguments. That’s readily apparent if you read the docstring, but the docstring still has to be read and understood before the code makes sense.

The return macro is even worse. First of all, the fact that return is only usable within defpipe hints at some hidden coupling between the two, which is exactly what it is. Secondly, the word return is commonly understood to mean an immediate exit from a function. Clojure does not support non-tail function returns, and my macro does not add them, so the name return is confusing.

Using return correctly requires that the user first understand the defpipe macro, then understand the “mini language” I have created in the body of return, and also know that return only works in tail position inside of defpipe.

Is it Worth It?

Confusion, lack of clarity, and time spent reading docs: Those are the costs. The benefits are comparatively meager. Using the macros, my example is shorter by a couple of lines, one let, and some destructuring.

In short, the costs outweigh the benefits. Code using the defpipe macro is actually worse than code without the macro because it requires more effort to read. That’s not to say that the pipeline pattern I’ve described isn’t useful: It is. But my macros haven’t improved on that pattern enough to be worth their cost.

That’s the crux of the argument about macros. Whenever you think about writing one, ask yourself, “Is it worth it?” Is the benefit provided by the macro – in brevity, clarity, or power – worth the cost, in time, for you or someone else to understand it later? If the answer is anything but a resounding “yes” then you probably shouldn’t be writing a macro.

Of course, the same question can (and should) be asked of any code we write. Macros are a special case because they are so powerful that the cost of maintaining them is higher than that of “normal” code. Functions and values have semantics that are specified by the language and universally understood; macros can define their own languages. Buyer beware.

I still got some value out of the original post as an intellectual exercise, but it’s not something I’m going to put to use in my production code.

Why I’m Using ClojureScript

Elise Huard wrote about why she’s not using ClojureScript. To quote her essential point, “The browser doesn’t speak clojure, it speaks javascript.”

This is true. But the CPU doesn’t speak Clojure either, or JavaScript. This argument against ClojureScript is similar to arguments made against any high-level language which compiles down to a lower-level representation. Once upon a time, I feel sure, the same argument was made against FORTRAN.

A new high-level language has to overcome a period of skepticism from those who are already comfortable programming in the lower-level representation. A young compiler struggles to produce code as efficient as that hand-optimized by an expert. But compilers tend to get better over time, and some smart folks are working hard on making ClojureScript fast. ClojureScript applications can get the benefit of improvements in the compiler without changing their source code, just as Clojure applications benefit from years of JVM optimizations.

To address Huard’s other points in order:

1. Compiled ClojureScript code is hard to read, therefore hard to debug.

This has not been an issue for me. In development mode (no optimizations, with pretty-printing) ClojureScript compiles to JavaScript which is, in my opinion, fairly readable. Admittedly, I know Clojure much better than I know JavaScript. The greater challenge for me has been working with the highly-dynamic nature of JavaScript execution in the browser. For example, a function called with the wrong number of arguments will not trigger an immediate error. Perhaps ClojureScript can evolve to catch more of these errors at compile time.

2. ClojureScript forces the inclusion of the Google Closure Library.

This is mitigated by the Google Closure Compiler’s dead-code elimination and aggressive space optimizations. You only pay, in download size, for what you use. For comparison, jQuery 1.7.2 is 33K, minified and gzipped, and caching doesn’t always save you. “Hello World” in ClojureScript, optimized and gzipped, is 18K.

3. Hand-tuning performance is harder in a higher-level language.

This is true, as per my comments above about high-level languages. Again, this has not been an issue for me, but you can always “drop down” to JavaScript for specialized optimizations.

4. Cross-browser compatibility is hard.

This is, as Huard admits, unavoidable in any language. The Google Closure Libraries help with some of the basics, and ClojureScript libraries such as Domina are evolving to deal with other browser-compatibility issues. You also have the entire world of JavaScript libraries to paper over browser incompatibilities.

* * *

Overall, I think I would agree with Elise Huard when it comes to browser programming “in the small.” If you just want to add some dynamic behavior to an HTML form, then ClojureScript has little advantage over straight JavaScript, jQuery, and whatever other libraries you favor.

What ClojureScript allows you to do is tackle browser-based programming “in the large.” I’ve found it quite rewarding to develop entire applications in ClojureScript, something I would have been reluctant to attempt in JavaScript.

It’s partially a matter of taste and familiarity. Clojure programmers such as myself will likely prefer ClojureScript over JavaScript. Experienced JavaScript programmers will have less to gain — and more work to do, learning a new language — by adopting ClojureScript. JavaScript is indeed “good enough” for a lot of applications, which means ClojureScript has to work even harder to prove its worth. I still believe that ClojureScript has an edge over JavaScript in the long run, but that edge will be less immediately obvious than the advantage that, say, Clojure on the JVM has over Java.

Syntactic Pipelines

Lately I’ve been thinking about Clojure programs written in this “threaded” or “pipelined” style:

    (defn large-process [input]
      (-> input
          subprocess-one
          subprocess-two
          subprocess-three))

If you saw my talk at Clojure/West (video forthcoming) this should look familiar. The value being “threaded” by the -> macro from one subprocess- function to the next is usually a map, and each subprocess can add, remove, or update keys in the map. A typical subprocess function might look something like this:

    (defn subprocess-two [data]
      (let [{:keys [alpha beta]} data]
        (-> data
            (assoc :epsilon (compute-epsilon alpha))
            (update-in [:gamma] merge (compute-gamma beta)))))

Most subprocess functions, therefore, have a similar structure: they begin by destructuring the input map and end by performing updates to that same map.

This style of programming tends to produce slightly longer code than would be obtained by writing larger functions with let bindings for intermediate values, but it has some advantages. The structure is immediately apparent: someone reading the code can get a high-level overview of what the code does simply by looking at the outer-most function, which, due to the single-pass design of Clojure’s compiler, will always be at the bottom of a file. It’s also easy to insert new functions into the process: as long as they accept and return a map with the same structure, they will not interfere with the existing functions.

The only problem with this code from a readability standpoint is the visual clutter of repeatedly destructuring and updating the same map. (It’s possible to move the destructuring into the function argument vector, but it’s still messy.)
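To make that parenthetical concrete, here is a rough sketch of the same subprocess with the destructuring moved into the argument vector. The bodies of `compute-epsilon` and `compute-gamma` are placeholders invented for this illustration, since the post never defines them.

```clojure
;; Hypothetical stand-ins for helpers the post leaves undefined.
(defn compute-epsilon [alpha] (* 2 alpha))
(defn compute-gamma [beta] {:from-beta beta})

;; Destructuring in the argument vector; :as keeps a name for the whole map.
(defn subprocess-two [{:keys [alpha beta] :as data}]
  (-> data
      (assoc :epsilon (compute-epsilon alpha))
      (update-in [:gamma] merge (compute-gamma beta))))

(subprocess-two {:alpha 1 :beta 2 :gamma {:existing true}})
;; returns a map equal to
;; {:alpha 1, :beta 2, :gamma {:existing true, :from-beta 2}, :epsilon 2}
```

The `let` is gone, but the `{:keys [...] :as data}` boilerplate and the `(-> data ...)` wrapper still repeat in every subprocess function.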

defpipe

What if we could clean up the syntax without changing the behavior? That’s exactly what macros are good for. Here’s a first attempt:

    (defmacro defpipe [name argv & body]
      `(defn ~name [arg#]
         (let [{:keys ~argv} arg#]
           ~@body)))

    (macroexpand-1 '(defpipe foo [a b c] ...))
    ;;=> (clojure.core/defn foo [arg__47__auto__]
    ;;     (clojure.core/let [{:keys [a b c]} arg__47__auto__] ...))

That doesn’t quite work: we’ve eliminated the :keys destructuring, but lost the original input map.

return

What if we make a second macro specifically for updating the input map?

    (def ^:private pipe-arg (gensym "pipeline-argument"))

    (defmacro defpipe [name argv & body]
      `(defn ~name [~pipe-arg]
         (let [{:keys ~argv} ~pipe-arg]
           ~@body)))

    (defn- return-clause [spec]
      (let [[command sym & body] spec]
        (case command
          :update `(update-in [~(keyword (name sym))] ~@body)
          :set    `(assoc ~(keyword (name sym)) ~@body)
          :remove `(dissoc ~(keyword (name sym)) ~@body)
          spec))) ; fallback: hand any other expression to -> unchanged

    (defmacro return [& specs]
      `(-> ~pipe-arg
           ~@(map return-clause specs)))

This requires some more explanation. The return macro works in tandem with defpipe, and provides a mini-language for threading the input map through a series of transformations. So it can be used like this:

    (defpipe foo [a b]
      (return (:update a + 10)
              (:remove b)
              (:set c a)))

    ;; which expands to:
    (defn foo [input]
      (let [{:keys [a b]} input]
        (-> input
            (update-in [:a] + 10)
            (dissoc :b)
            (assoc :c a))))

As a fallback, we can put any old expression inside the return, and it will be just as if we had used it in the -> macro. The rest of the code inside defpipe, before return, is a normal function body. The return can appear anywhere inside defpipe, as long as it is in tail position.

The symbol used for the input argument has to be the same in both defpipe and return, so we define it once and use it again. This is safe because that symbol is not exposed anywhere else, and the gensym ensures that it is unique.

defpipeline

Now that we have the defpipe macro, it’s trivial to add another macro for defining the composition of functions created with defpipe:

    (defmacro defpipeline [name & body]
      `(defn ~name [arg#]
         (-> arg# ~@body)))

This macro does so little that I debated whether or not to include it. The only thing it eliminates is the argument name. But I like the way it expresses intent: a pipeline is purely the composition of defpipe functions.

Further Possibilities

One flaw in the “pipeline” style is that it cannot express conditional logic in the middle of a pipeline. Some might say this is a feature: the whole point of the pipeline is that it defines a single thread of execution. But I’m toying with the idea of adding syntax for predicate dispatch within a pipeline, something like this:

    (defpipeline name
      pipe1
      ;; Map signifies a conditional branch:
      {predicate-a pipe-a
       predicate-b pipe-b
       :else       pipe-c}
      ;; Regular pipeline execution follows:
      pipe2
      pipe3)
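The proposed map syntax is not implemented anywhere in this post. As a rough approximation with plain functions, a branch can be expressed as one more step in the pipeline. The `branch` helper and the example predicates below are hypothetical names made up for this sketch.

```clojure
(defn branch
  "Hypothetical helper: takes alternating predicates and pipe functions and
  returns a pipe function. The pipe paired with the first predicate that is
  true for the input map is applied; :else always matches. If nothing
  matches, the map is returned unchanged."
  [& clauses]
  (fn [data]
    (loop [[[pred pipe] & more] (partition 2 clauses)]
      (cond
        (nil? pred) data
        (or (= :else pred) (pred data)) (pipe data)
        :else (recur more)))))

(def classify
  (branch #(neg? (:n %))  #(assoc % :sign :negative)
          #(zero? (:n %)) #(assoc % :sign :zero)
          :else           #(assoc % :sign :positive)))

(classify {:n -1}) ; equal to {:n -1, :sign :negative}
(classify {:n 5})  ; equal to {:n 5, :sign :positive}
```

Because `branch` returns an ordinary one-argument function, it can sit in a `->` form as `((branch ...))` without any change to the macros.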

The Whole Shebang

The complete implementation follows. I’ve added doc strings, metadata, and some helper functions to parse the arguments to defpipe and defpipeline in the same style as defn.

    (def ^:private pipe-arg (gensym "pipeline-argument"))

    (defn- req
      "Required argument"
      [pred spec message]
      (assert (pred (first spec))
              (str message " : " (pr-str (first spec))))
      [(first spec) (rest spec)])

    (defn- opt
      "Optional argument"
      [pred spec]
      (if (pred (first spec))
        [(list (first spec)) (rest spec)]
        [nil spec]))

    (defmacro defpipeline [name & spec]
      (let [[docstring spec] (opt string? spec)
            [attr-map spec] (opt map? spec)]
        `(defn ~name
           ~@docstring
           ~@attr-map
           [arg#]
           (-> arg# ~@spec))))

    (defmacro defpipe
      "Defines a function which takes one argument, a map. The params
      are symbols, which will be bound to values from the map as by
      :keys destructuring. In any tail position of the body, use the
      'return' macro to update and return the input map."
      ;; The attr-map goes before the parameter vector; placed after it,
      ;; the map would be read as a pre/post-condition map and ignored.
      {:arglists '([name doc-string? attr-map? [params*] & body])}
      [name & spec]
      (let [[docstring spec] (opt string? spec)
            [attr-map spec] (opt map? spec)
            [argv spec] (req vector? spec "Should be a vector")]
        (assert (every? symbol? argv)
                (str "Should be a vector of symbols : " (pr-str argv)))
        `(defn ~name
           ~@docstring
           ~@attr-map
           [~pipe-arg]
           (let [{:keys ~argv} ~pipe-arg]
             ~@spec))))

    (defn- return-clause [spec]
      (let [[command sym & body] spec]
        (case command
          :update `(update-in [~(keyword (name sym))] ~@body)
          :set    `(assoc ~(keyword (name sym)) ~@body)
          :remove `(dissoc ~(keyword (name sym)) ~@body)
          spec))) ; fallback: hand any other expression to -> unchanged

    (defmacro return
      "Within the body of the defpipe macro, returns the input argument
      of the defpipe function. Must be in tail position. The input
      argument, a map, is threaded through exprs as by the -> macro.

      Expressions within the 'return' macro may take one of the
      following forms:

          (:set key value)      ; like (assoc :key value)
          (:remove key)         ; like (dissoc :key)
          (:update key f args*) ; like (update-in [:key] f args*)

      Optionally, any other expression may be used: the input map will
      be inserted as its first argument."
      [& exprs]
      `(-> ~pipe-arg
           ~@(map return-clause exprs)))

And a Made-Up Example

    (defpipe setup []
      (return ; imagine these come from a database
       (:set alpha 4)
       (:set beta 3)))

    (defpipe compute-step1 [alpha beta]
      (return (:set delta (+ alpha beta))))

    (defpipe compute-step2 [delta]
      (return (assoc-in [:x :y] 42)        ; ordinary function expression
              (:update delta * 2)
              (:set gamma (+ delta 100)))) ; uses old value of delta

    (defpipe respond [alpha beta gamma delta]
      (println " Alpha is" alpha "\n"
               "Beta is" beta "\n"
               "Delta is" delta "\n"
               "Gamma is" gamma)
      (return)) ; not strictly necessary, but a good idea

    (defpipeline compute
      compute-step1
      compute-step2)

    (defpipeline process-request
      setup
      compute
      respond)

    (process-request {})
    ;; Alpha is 4
    ;; Beta is 3
    ;; Delta is 14
    ;; Gamma is 107
    ;;=> {:gamma 107, :x {:y 42}, :delta 14, :beta 3, :alpha 4}
    ;; (the order in which keys are printed depends on the Clojure version)

Three Kinds of Error

Warning! This post contains strong, New York City-inflected language. If you are discomfited or offended by such language, do not read further …

further …

further …

further …

This is about three categories of software error. I have given them catchy names for purposes of illustration. The three kinds of error are the Fuck-Up, the Oh, Fuck and the What the Fuck?.

One

The Fuck-Up is a simple programmer mistake. In prose writing, it would be called a typo. You misspelled the name of a function or variable. You forgot to include all the arguments to a function. You misplaced a comma, bracket, or semicolon.

Fuck-Up errors are usually caught early in the development process and very soon after they are written. You made a change, and suddenly your program doesn’t work. You look back at what you just wrote and the mistake jumps right out at you.

Statically-typed languages can often catch Fuck-Ups at compile time, but not always. The mistake may be syntactically valid but semantically incorrect, or it may be a literal value such as a string or number which is not checked by the compiler. I find that one of the more insidious Fuck-Ups occurs when I misspell the name of a field, property, or keyword. This is more common in dynamically-typed languages that use literal keywords for property accesses, but even strongly-typed Java APIs sometimes use strings for property names. Compile-time type checkers cannot save you from all your Fuck-Ups.

I’ve occasionally wished for a source code checker that would look at all syntactic tokens in my program and warn me whenever I use a token exactly once: that’s a good candidate for a typo. Editors can help: even without the kind of semantic auto-completion found in Java IDEs, I’ve found I can avoid some misspellings by using auto-completion based solely on other text in the project.
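A crude version of that checker fits in a few lines. This is only a sketch under a big assumption: a "token" is anything matching a simple identifier regex, which ignores strings, comments, and real syntax. The function name `singleton-tokens` is made up for the example.

```clojure
(defn singleton-tokens
  "Returns the set of identifier-like tokens that occur exactly once
  in the given source text."
  [source]
  (->> (re-seq #"[A-Za-z_][\w-]*" source)
       frequencies
       (filter (fn [[_ n]] (= n 1)))
       (map first)
       set))

;; "totl" is a typo for "total"
(singleton-tokens "(let [total 1] (+ total totl))")
;;=> #{"let" "totl"}
```

The typo is flagged, but so is `let`, which happens to be used once. A usable tool would need a list of known names from the language and its libraries to cut down on that noise.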

Fuck-Ups become harder to diagnose the longer they go unnoticed. They are particularly dangerous in edge-case code that rarely gets run. The application seems to work until it encounters that unusual path, at which point it fails mysteriously. The failure could be many layers removed from the source line containing the Fuck-Up. This is where rapid feedback cycles and test coverage are helpful.

Two

Said with a mixture of resignation and annoyance, Oh, Fuck names the category of error when a program makes a seemingly-reasonable assumption about the state of the world that turns out not to be true. A file doesn’t exist. There isn’t enough disk space. The network is unreachable. We have wandered off the happy path and stumbled into the wilderness of the unexpected.

Oh, Fuck errors are probably the most common kind to make it past tests, due to positive bias. They’re also the most commonly ignored during development, because they are essentially unrelated to the problem at hand. You don’t care why the file wasn’t there, and it’s not necessarily something you can do anything about. But your code still has to deal with the possibility.

I would venture that most errors which make it through static typing, testing, and QA to surface in front of production users are Oh, Fuck errors. It’s difficult to anticipate everything that could go wrong.

However, I believe that Oh, Fuck errors are often inappropriately categorized as exceptions, because they are not really “exceptional,” i.e. rare. Exceptions are a form of non-local control flow, the last relic of GOTO. Whenever a failed condition causes an Oh, Fuck error, it typically needs to be handled locally, near the code that attempted to act on the condition, not in some distant error handler. Java APIs frequently use exceptions to indicate that an operation failed, but really they’re working around Java’s lack of union types. The return type of a file-read operation, for example, is the union of its normal return value and IOException. You have to handle both cases, but there’s rarely a good reason for the IOException to jump all the way out of the current function stack.
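In a dynamically-typed language, one way to approximate that union is to return a small map tagged with either the value or the failure. The sketch below assumes a hypothetical wrapper named `try-read-file` and a made-up `:ok` / `:error` convention; it is one possible shape, not an established API.

```clojure
(defn try-read-file
  "Returns {:ok contents} on success or {:error message} if the file
  cannot be read. The IOException never leaves this function."
  [path]
  (try
    {:ok (slurp path)}
    (catch java.io.IOException e
      {:error (.getMessage e)})))

;; The caller handles both cases right where the read was attempted.
(let [result (try-read-file "/no/such/file")]
  (if (contains? result :ok)
    (count (:ok result))
    0))
;;=> 0
```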

Programming “defensively” is not a bad idea, but filling every function with try/catch clauses is tedious and clutters up the code with non-essential concerns. I would advocate, instead, trying to isolate problem-domain code behind a “defensive” barrier of condition checking. Enumerate all the assumptions your code depends on, then encapsulate it in code which checks those assumptions. Then the problem-domain code can remain concise and free of extraneous error-checking.
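Here is a minimal sketch of such a barrier. The names `word-count` and `checked-word-count`, and the `:ok` / `:error` result maps, are invented for this illustration.

```clojure
;; Problem-domain code: concise, no error checking.
(defn word-count [text]
  (count (re-seq #"\S+" text)))

;; Defensive barrier: checks the assumptions, then calls the domain code.
(defn checked-word-count [path]
  (let [f (java.io.File. path)]
    (cond
      (not (.exists f))  {:error :missing}
      (not (.canRead f)) {:error :unreadable}
      :else              {:ok (word-count (slurp f))})))

(checked-word-count "/no/such/file")
;;=> {:error :missing}
```

The checks are not airtight: the file could still disappear between the check and the read. What the barrier buys is that every assumption is listed in one place while `word-count` stays free of error handling.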

Java APIs also frequently use null return values to indicate failure. Every non-primitive Java type declaration is an implicit union with null, but it’s easy to forget this, leading to the dreaded and difficult-to-diagnose NullPointerException. The possibility of a null return value really should be part of the type declaration. For languages which do not support such declarations, rigorous documentation is the only recourse.

Three

Finally, we have the errors that really are exceptional circumstances. You ran out of memory, divided by zero, overflowed an integer. In rare cases, these errors are caused by intermittent hardware failures, making them virtually impossible to reproduce consistently. More commonly, they are caused by emergent properties of the code that you did not anticipate. What the Fuck? errors are almost always encountered in production, when the program is exposed to new circumstances, longer runtimes, or heavier loads than it was ever tested with.

By definition, What the Fuck? errors are those you did not expect. The best you can do is try to ensure that such errors are noticed quickly and are not allowed to compromise the correct behavior of the system. Depending on requirements, this may mean the system should immediately shut down on encountering such an error, or it may mean selectively aborting and restarting the affected sub-processes. In either case, non-local control flow is probably your best hope. What the Fuck? errors are a crisis in your code: forget whatever you were trying to do and concentrate on minimizing the damage. The worst response is to ignore the error and continue as if nothing had happened: the system is in a failed state, and nothing it produces can be trusted.

Conclusion

All errors, even What the Fuck? errors, are ultimately programmer errors. But programmers are human, and software is hard. These categories I’ve named are not the only kinds of errors software can have, nor are they mutually exclusive. What starts as a simple Fuck-Up could trigger an Oh, Fuck that blossoms into a full-blown What the Fuck?.

Be careful out there.

Clojure 2011 Year in Review

A new year is upon us. Before the world ends, let’s take a look back at what 2011 meant for everybody’s favorite programming language:

- Clojure 1.3.0 was released, bringing better performance to numeric applications, reader syntax for record types, and other enhancements.

- ClojureScript was unveiled to the world, leading to universal confusion about how to pronounce “Clojure” differently from “Closure.”

- The second Clojure/conj went off without a hitch but plenty of bang.

- Logic programming burst into the mindspace of Clojure developers with core.logic.

- Clojure-contrib was completely restructured to support new libraries, a distributed development model, and an automated release process.

- ClojureCLR got a new home and recognition as a Clojure sub-project.

- David Liebke introduced Avout, an extension of Clojure’s software transactional memory model to distributed computing.

- Clojure project management tools Leiningen and Cake decided to bury the hatchet and join forces for the greater good.

- Clojure/core started blogging more regularly.

- Books! The Joy of Clojure and Clojure in Action (both from Manning) hit the bookshelves. The Second Edition of Programming Clojure (Pragmatic) and Clojure Programming (O’Reilly) went into early release.

- PragPub magazine dedicated their July 2011 issue to Clojure.

- Chas Emerick released Clojure Atlas, an interactive documentation system.

- Clojure creator Rich Hickey spoke at Strange Loop and caused a minor tweet-storm.

- 4Clojure entered the world, giving thousands of programmers a new way to kill time and learn Clojure.

At this point, I’ve been typing and looking up links for an hour, so I’m calling it quits. Needless to say, this was a big year for Clojure, and I’m sure there’s a ton of stuff that I missed on this list. Regarding 2012, all I can say is, “You ain’t seen nothing yet.”

A Hacker’s Christmas Song

I’ll be home for Christmas

To fix your old PC.

Please have Coke and Mountain Dew

And pizza there for me.

Christmas Eve will find me

In Windows Update screens,

I’ll be done by New Year’s

If only in my dreams.

I’ll be home for Christmas

Installing Google Chrome,

Removing malware, cruft, and crud,

And backing up your /home.

Christmas Eve will find me

Swearing at the screen,

Replacing it with Linux

If only in my dreams.

What Is a Program?

What is a program? Is it the source code that a programmer typed? The physical state of the machine on which it is run? Or something more abstract, like the data structures it creates? This is not a purely philosophical question: it has consequences for how programming languages are designed, how development tools work, and what programmers do when we go to work every day.

How much information can we determine about a program without evaluating it? At the high end, we have the Halting Problem: for any Turing-complete programming language, we cannot determine if a given program written in that language will terminate. At the low end, consider an easier problem: can we determine the names of all of the invokable functions/procedures/methods in a given program? In a language in which functions are not first-class entities, such as C or Java, this is generally easy, because every function must have a specific lexical representation in source code. If we can parse the source code, we can find all the functions. At the other extreme, finding all function names in a language like Ruby is nearly impossible in the face of method_missing, define_method, and other “metaprogramming” tricks.

Again, these decisions have consequences. Ruby code is flexible and concise, but it’s hard to parse: ten thousand lines of YACC at last count. I find that documentation generated from Ruby source code tends to be harder to read — and less useful — than JavaDoc.

Here’s another example: can I determine, without evaluating it, what other functions a given function calls? In Java this is easy. In C it’s much harder, because of the preprocessor. To build a complete call graph of a C function, we need to run the preprocessor first, in effect evaluating some of the code. In Ruby — Ha! Looking at a random piece of Ruby code, I sometimes have trouble distinguishing method calls from local variables. Again because of method_missing, I’m not sure it’s even possible to distinguish the two without evaluating the code.

I do not want to argue that method_missing, macros, and other metaprogramming tools in programming languages are bad. But they do make secondary tooling harder. The idea for this blog post came into my head after observing the following Twitter conversation:

Dave Ray: “seesaw.core is 3500 lines of which probably 2500 are docstrings. I wonder if they could be externalized somehow…”

Chas Emerick: “Put your docs in another file you load at the *end* of seesaw.core, w/ a bunch of (alter-meta! #'f assoc :doc "docs") exprs”

Anthony Grimes: “I hate both of your guts for even thinking about doing this.”

Chas Emerick: “Oh! [Anthony] is miffed b/c Marginalia doesn’t load namespaces it’s generating docs for. What was the rationale for that?”

Michael Fogus: “patches welcomed.”

Chas Emerick: “Heh, sure. I’m just wondering if avoiding loading was intentional, i.e. in case someone has a (launch-missiles) top-level.”

(As an aside, it’s hard to link to a Twitter conversation instead of individual tweets.)
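For readers who have not seen the trick Chas suggests in that conversation, here is roughly what it looks like. The function `f` and its docstring are placeholders.

```clojure
;; In the main source file: the function, with no docstring.
(defn f [x] (inc x))

;; In a separate file loaded afterwards: attach the documentation
;; to the Var's metadata.
(alter-meta! #'f assoc :doc "Returns x plus one.")

(:doc (meta #'f))
;;=> "Returns x plus one."
```

The docstring exists only after the second form has been evaluated, so a tool that reads the source without loading it will never see it.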

Clojure — or any Lisp, really — exemplifies a tension between tools that operate on source code and tools that operate on the running state of a program. For any code-analysis task one might want to automate in Clojure, there are two possible approaches. One is to read the source as a sequence of data structures and analyze it. After all, in a Lisp, code is data. The other approach is to eval the source and analyze the state it generates.

For example, suppose I wanted to determine the names of all invokable functions in a Clojure program. I could write a program that scanned the source looking for forms beginning with defn, or I could evaluate the code and use introspection functions to search for all Vars in all namespaces whose values are functions. Which method is “correct” depends on the goal.
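Both approaches can be sketched in a few lines. The function names below are invented for this example, and the source scanner only looks at top-level `defn` forms, which is exactly the kind of limitation being discussed.

```clojure
(require 'clojure.string)

(defn defn-names-from-source
  "Approach one: read the forms in a source string without evaluating
  them, and collect the names of top-level defn forms."
  [source]
  (let [rdr (java.io.PushbackReader. (java.io.StringReader. source))]
    (binding [*read-eval* false]
      (loop [names []]
        (let [form (read rdr false ::eof)]
          (cond
            (= ::eof form) names
            (and (seq? form) (= 'defn (first form)))
            (recur (conj names (second form)))
            :else (recur names)))))))

(defn fn-names-from-runtime
  "Approach two: inspect an already-loaded namespace and collect the
  names of Vars whose values are functions (macros included)."
  [ns-sym]
  (for [[sym v] (ns-interns ns-sym)
        :when (and (bound? v) (fn? @v))]
    sym))

;; The scanner finds a but misses b, which is also a function.
(defn-names-from-source "(defn a [] 1) (def b (fn [] 2))")
;;=> [a]

;; The runtime approach sees every function Var, however it was defined.
(contains? (set (fn-names-from-runtime 'clojure.string)) 'join)
;;=> true
```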

Michael Fogus wrote Marginalia, a documentation tool for Clojure which takes the first approach. Marginalia was inspired by Literate Programming, which advocates writing source code as a human-readable document, so the unevaluated “text” of the program is what it deals with. In contrast, Tom Faulhaber’s Autodoc for Clojure takes the second approach: loading code and then examining namespaces and Vars. Autodoc has to work this way to produce documentation for the core Clojure API: many functions in core.clj are defined before documentation strings are available, so their doc strings are loaded later from separate files. (As an alternative, those core functions could be rewritten in standard syntax after the language has been bootstrapped.)

One of the talking-point features of any Lisp is “all of the language, all of the time.” All features of the language are always available in any context: macros give access to the entire runtime of the language at compile time, and eval gives access to the entire compiler at runtime. These are powerful tools for developers, but they make both the compiler and the runtime more complicated. ClojureScript, on the other hand, does not have eval and its macros are written in a different language (Clojure). These choices were deliberate because ClojureScript was designed to be compiled into compressed JavaScript to run on resource-constrained platforms, but I think it also made the ClojureScript compiler easier to write.
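A tiny example of each direction, in Clojure on the JVM. The macro name `compile-time-sum` is made up for the illustration.

```clojure
;; Runtime at compile time: the body of a macro is ordinary code that
;; runs during macroexpansion. Here the addition happens before the
;; program runs, and the call site is replaced by the literal result.
(defmacro compile-time-sum [& nums]
  (apply + nums))

(compile-time-sum 1 2 3)
;;=> 6

;; Compiler at runtime: eval compiles and runs a data structure that
;; was built while the program was running.
(eval (list '+ 1 2 3))
;;=> 6
```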

Chas’s last point about code at the top-level comes up often in discussions around Clojure tooling. The fact that we can write arbitrary code anywhere in a Clojure source file — launch-missiles being the popular example — means we can never be sure that evaluating a source file is free of side effects. But not evaluating the source means we can never be sure that we have an accurate model of what the code does. Welcome back to the Halting Problem.

Furthermore, maintaining all the metadata associated with a dynamic runtime — namespaces, Vars, doc strings, etc. — has measurable costs in performance and (especially) memory. If Clojure is ever to be successful on resource-constrained devices like phones, it will need the ability to produce compiled code that omits most or all of that metadata. At the same time, developers accustomed to the “creature comforts” of modern IDEs will continue to clamor for even more metadata. Fortunately, this isn’t a zero-sum game. ClojureScript proves that Clojure-the-language can work without some of those features, and there have been discussions around making the ClojureScript analyzer more general and implementing targeted build profiles for Clojure. But I don’t think we’ll ever have a perfect resolution of the conflict between tooling and optimization. Programs are ideas, and source code is only one representation among many. To understand a program, one needs to build a mental representation of how it operates. And to do that we’re stuck with the oldest tool we have, our brains.

JDK Version Survey Results

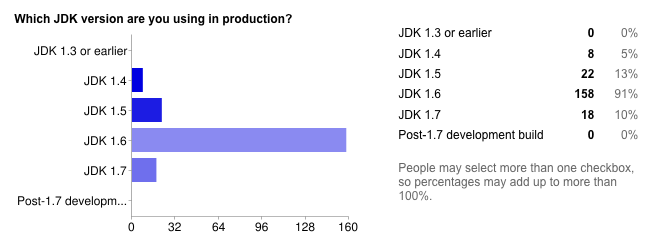

After a month and about 175 responses, here are the results of my JDK Version Usage Survey (now closed):

Versions: Almost everyone uses 1.6. A few are still using 1.5, and a few are trying out 1.7. Only a handful are still on 1.4. Fortunately, no one is on a version older than 1.4.

Reasons: These are more varied. The most common reason for not upgrading is lack of time, with “it just works” running a close second. A little less than half of respondents are limited by external forces: either operations/management or third-party dependencies.

Not much came out in the comments. Banks and other large institutions seem to be the most resistant to upgrades, especially if they’ve been bitten by past JDK changes.

JDK Version Survey

The most recent JDK version poll I could find was from 2008 (thanks, Alex!) so here’s a new one.